UNCERTAINTY QUANTIFICATION

The importance of controlling variability is well recognized in disciplines such as financial risk management. More recently, robust optimization techniques have started to gain attention within the engineering and scientific communities, because real-world optimization problems in many disciplines involve uncertainty.

Dealing with Uncertainty at the Design Stage

Uncertainty may be caused by a number of different factors. It can stem from measurement errors or sampling difficulties, or it can depend on future events that cannot be foreseen with certainty (uncontrollable disturbances and forecasting errors).

The presence of uncertainty makes traditional design and optimization approaches insufficient. Robust optimization is a methodology that aims to solve problems in which uncertainty, of any type or source, is present in the data or in the model.

The design under uncertainty methodology provides a systematic way to evaluate options and quantify unknowns, leading to improved decision-making.

The Numerical Aspects of Uncertainty Quantification

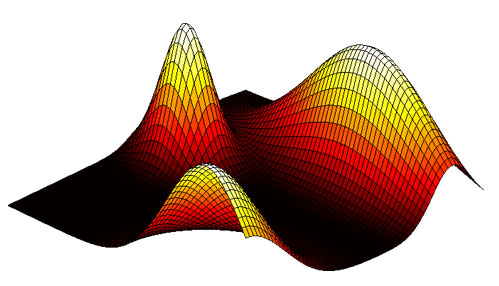

In many engineering design problems, the design parameters are known only within some tolerance or, in other cases, are described only by a probability distribution, such as a Gaussian distribution, and by summary statistics such as its mean and variance. It follows that designing a product for one specific operational scenario does not guarantee good performance in all other scenarios.

Deterministic approaches to design optimization do not consider the impact that variations in the input parameters (or in the scenario variables) may have on the product’s final performance. As a result, a design solution may be very sensitive to these variations, and the final product may perform poorly on average.
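The sensitivity described above can be illustrated with a small sketch. Everything in it is an assumption made for illustration: the `performance` curve is hypothetical, the input variation is taken to be Gaussian with standard deviation `sigma`, and the average is estimated by plain random sampling. It compares a design sitting on a tall, narrow peak with one sitting on a lower, broad peak.

```python
import math
import random
import statistics


def performance(x):
    """Hypothetical performance curve (higher is better).

    A tall, narrow peak near x = 1 and a lower, broad peak near x = 3.
    """
    narrow = 1.0 * math.exp(-((x - 1.0) / 0.05) ** 2)
    broad = 0.8 * math.exp(-((x - 3.0) / 1.0) ** 2)
    return narrow + broad


def mean_performance(x_nominal, sigma, n_samples=20000, seed=0):
    """Average performance when the input varies as N(x_nominal, sigma^2)."""
    rng = random.Random(seed)
    samples = [performance(rng.gauss(x_nominal, sigma)) for _ in range(n_samples)]
    return statistics.fmean(samples)


sigma = 0.2  # assumed standard deviation of the input variation

# Nominal (deterministic) view: the narrow peak looks like the better design.
nominal_narrow = performance(1.0)
nominal_broad = performance(3.0)

# Averaged over the input variation, the ranking can reverse.
avg_narrow = mean_performance(1.0, sigma)
avg_broad = mean_performance(3.0, sigma)

print(f"nominal: narrow={nominal_narrow:.3f}  broad={nominal_broad:.3f}")
print(f"average: narrow={avg_narrow:.3f}  broad={avg_broad:.3f}")
```

With these assumed numbers, the narrow-peak design scores higher at its nominal point but lower on average once the input is perturbed, which is the kind of off-design behavior a purely deterministic optimization would not reveal.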

Therefore, these uncertainties should be included in the optimization procedure, so that a prescribed level of robustness can be achieved in the optimal designs. Taking uncertainties into account can be computationally expensive, however, and keeping the total number of evaluations manageable requires a deep knowledge of the subject.
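One common way to express this idea, given here only as an illustrative formulation and not as the method of any particular tool, is to optimize a combination of the mean and the standard deviation of the performance. The symbols are assumptions introduced for this sketch: $x$ denotes the design variables, $\xi$ the uncertain parameters, $f$ the performance measure to be minimized, and $k \ge 0$ a weight chosen by the designer.

$$
\min_{x} \; \mu_f(x) + k\,\sigma_f(x),
\qquad
\mu_f(x) = \mathbb{E}_{\xi}\big[f(x,\xi)\big],
\qquad
\sigma_f^2(x) = \mathbb{E}_{\xi}\big[\big(f(x,\xi) - \mu_f(x)\big)^2\big]
$$

Setting $k = 0$ optimizes average performance only, while larger values of $k$ penalize designs whose performance varies strongly with $\xi$.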

Why Should the Design of a Process Consider Uncertainty Quantification?

Design approaches that consider only deterministic behavior tend to "over-optimize", producing solutions and products that may perform well under the specified conditions but can have poor off-design characteristics. This is because, in reality, uncertainty and variability are innate to any process system, so the expected outcome of any alternative can never be computed with certainty.

Although design under uncertainty is computationally more demanding than classical deterministic design, the results obtained by taking all sources of uncertainty into account are the most reliable. In practice, however, managers must find a compromise between the profitability and the reliability of a given solution.

Many different approaches have been tried to address uncertainty within process optimization models, the most classic being Monte Carlo sampling. Because Monte Carlo sampling can be too computationally demanding, other methodologies, such as Latin Hypercube sampling and polynomial chaos, have been studied to reduce the cost of evaluating uncertainty.
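The difference between plain Monte Carlo sampling and Latin Hypercube sampling can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `model` function is hypothetical, both uncertain inputs are taken to be uniform on [0, 1], and the sampler is a basic textbook Latin Hypercube (one random point per equal-width stratum, shuffled independently in each dimension), not the implementation of any particular package.

```python
import random
import statistics


def model(u1, u2):
    """Hypothetical response on the unit square; its exact mean is 1/2 + 1/3."""
    return u1 + u2 ** 2


def monte_carlo(n, rng):
    """n independent uniform points in the unit square."""
    return [(rng.random(), rng.random()) for _ in range(n)]


def latin_hypercube(n, dims, rng):
    """n points with exactly one sample in each of n strata per dimension."""
    columns = []
    for _ in range(dims):
        # One random point inside each stratum [k/n, (k+1)/n).
        column = [(k + rng.random()) / n for k in range(n)]
        # Shuffle so strata are paired at random across dimensions.
        rng.shuffle(column)
        columns.append(column)
    return list(zip(*columns))


def estimate_mean(points):
    """Sample-mean estimate of the model output."""
    return statistics.fmean([model(*p) for p in points])


def spread(sampler, n, repeats, seed):
    """Standard deviation of the mean estimate over repeated experiments."""
    rng = random.Random(seed)
    estimates = [estimate_mean(sampler(n, rng)) for _ in range(repeats)]
    return statistics.stdev(estimates)


n_points, n_repeats = 50, 200
mc_spread = spread(monte_carlo, n_points, n_repeats, seed=1)
lhs_spread = spread(lambda n, rng: latin_hypercube(n, 2, rng), n_points, n_repeats, seed=1)

print(f"Monte Carlo spread:     {mc_spread:.4f}")
print(f"Latin Hypercube spread: {lhs_spread:.4f}")
```

For the same number of model evaluations, the stratified sample in this example gives a noticeably tighter estimate of the mean. The size of the gain depends on the model, and it tends to be largest when the response is close to additive in its inputs, as this hypothetical one is.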

Today it is imperative that designs remain valid under all reasonable conditions of uncertainty and risk. By taking uncertainty into account, engineers gain greater confidence that the solution they present is robust and of high quality.